Technology has transformed academic writing, making it faster and more accessible but also raising ethical challenges. Here's what you need to know:

Key Takeaways:

- AI Tools Boost Productivity:
  - AI tools cut literature review time by 50%.
  - Manuscripts formatted with AI are 65% more likely to be accepted.
  - Digital reference managers improve citation accuracy by 30%.
- Ethical Concerns:
  - 34% of flagged papers in 2024 included undetected AI-generated content.
  - 68% of students struggle to distinguish AI-assisted content from original work.
  - Over-reliance on AI can reduce critical thinking.
- Solutions for Ethical Writing:
  - Assignments emphasizing the writing process reduce AI misuse by 40%.
  - Teaching "AI Literacy" helps students use tools responsibly.
  - Combining AI detection tools with human review achieves 94% accuracy.

Quick Comparison of Writing Eras:

| Writing Era | Tools Used | Average Draft Time | Error Rate |
|---|---|---|---|
| Pre-Digital | Handwriting, Typewriters | 42 hours | 18% |
| Early Digital | Word Processors | 28 hours | 12% |
| AI-Assisted | GPT-4, Grammarly | 19 hours | 6% |

Action Steps:

- For Teachers: Include AI ethics modules and require tracked changes in assignments.

- For Researchers: Clearly document AI usage in research stages.

- For Students: Limit AI-generated content to 30% of drafts and properly cite tools like ChatGPT.

Balancing technology and ethics is essential to maintain academic integrity in the digital age. Read on for detailed strategies and insights.

Changes in Academic Writing Methods

The way academic writing is done has evolved dramatically, bringing about faster processes but also raising ethical challenges that require careful attention.

From Pen to Digital Tools

Academic writing has come a long way, from handwritten pages to AI-powered tools. Key milestones include WordPerfect's introduction in the 1980s, Turnitin's launch in 1997 for plagiarism detection, and Zotero's debut in 2006, which cut citation errors by 73%.

Here's a quick look at how writing tools have impacted efficiency over the years:

| Writing Era | Primary Tools | Average Draft Time | Error Rate |
|---|---|---|---|
| Pre-Digital | Typewriters/Handwritten | 42 hours | 18% |
| Early Digital | Word Processors | 28 hours | 12% |
| AI-Assisted | GPT-4 + Grammarly | 19 hours | 6% |
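The table mixes two kinds of percentages, so it helps to compute the relative changes explicitly. The minimal Python sketch below uses only the table's own figures; `relative_reduction` is an illustrative helper name, not part of any tool mentioned here.

```python
# Figures copied from the comparison table: (average draft time in hours, error rate in %)
ERAS = {
    "Pre-Digital": (42, 18),
    "Early Digital": (28, 12),
    "AI-Assisted": (19, 6),
}


def relative_reduction(before: float, after: float) -> float:
    """Percentage drop from `before` to `after`, relative to `before`."""
    return (before - after) / before * 100


base_hours, base_errors = ERAS["Pre-Digital"]
for era, (hours, errors) in ERAS.items():
    if era == "Pre-Digital":
        continue  # skip the baseline era itself
    print(
        f"{era}: draft time down {relative_reduction(base_hours, hours):.1f}%, "
        f"error rate down {relative_reduction(base_errors, errors):.1f}% (relative)"
    )
# Early Digital: draft time down 33.3%, error rate down 33.3% (relative)
# AI-Assisted: draft time down 54.8%, error rate down 66.7% (relative)
```

An error rate falling from 18% to 6% is a 12-percentage-point drop, which is a two-thirds reduction relative to the pre-digital baseline.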

While these tools have undeniably sped up the writing process, they introduce new challenges. They can handle mechanics with ease, but they can't replace the deep thinking required for meaningful academic work.

Current AI Impact on Writing

AI tools are reshaping how research and writing are done, especially among students. A recent study found that 82% of graduate students use AI writing assistants regularly, compared to only 54% of faculty members. This gap highlights the ongoing shift in academic habits.

However, this increased productivity comes with risks. For instance, a 2023 Universitas Indonesia program that used QuillBot and ChatGPT in 142 courses produced 28% faster drafting, but it was also accompanied by a 41% rise in plagiarism concerns.

"The key lies in balanced use - these technologies should enhance rather than replace fundamental writing skills." - Dr. Arthur Fodouop Kouam, AI Ethics Researcher

AI tools clearly offer benefits, but they also bring challenges. Studies reveal that 29% of users rely too heavily on AI-generated content without proper checks, while 22% experience a decline in critical thinking when automation is overused. These findings emphasize the need to use these tools wisely, ensuring that they support rather than undermine original academic work.

Ethics Challenges with Digital Tools

AI and Plagiarism Risks

A 2024 study from the University of Illinois found that 34% of flagged academic papers included AI-generated sections that traditional plagiarism detection tools failed to catch. Additionally, 78% of educators reported encountering AI-generated submissions, highlighting how common these tools have become among students (Walden 2023). These challenges go beyond individual misuse, pointing to broader concerns about the reliability and safety of AI tools in academic settings.

AI Tool Safety and Trust

Audits conducted in 2024 exposed major flaws in academic AI tools:

- 31% of educational AI applications fail to comply with GDPR standards.

- 68% of NLP models exhibit racial bias in their evaluations.

- Grammar tools often penalize non-Western writing styles unfairly.

These findings underline the need for solutions that address both technical shortcomings and the educational priorities of institutions.

AI Help vs. Original Work

Institutions are beginning to tackle these ethical dilemmas with policy frameworks. For instance, Cambridge's 2024 guidelines allow the use of AI editing tools but require students to disclose when they use generative AI.

"Students might prioritize quick fixes from AI tools over deeply understanding their mistakes - essentially negating the learning process." - Dr. Ali Iskender, Educational Technology Researcher

Interestingly, research shows that controlled use of AI improved structural organization in 72% of cases. This duality - AI as both a helpful tool and a potential shortcut - highlights the need for thoughtful governance to ensure ethical and effective use.

Methods for Ethical Writing

Institutions are tackling ethical challenges in writing by focusing on three main strategies:

Crafting Better Writing Assignments

According to feedback from EFL teachers, assignments that focus on the writing process rather than just the final product reduce reliance on AI tools by 40%.

Some effective assignment adjustments include asking students to:

- Maintain annotated bibliographies with their own insights.

- Submit drafts that clearly show their writing progression.

- Provide research journey documentation to showcase their process.

- Include reflective writing to explain their thought process.

Teaching Responsible AI Writing Skills

The "AI Literacy Pyramid" framework provides a step-by-step method for teaching students how to use AI responsibly. It starts with basic technical knowledge and builds up to advanced application skills.

| Level | Focus Area | Skills Developed |
|---|---|---|
| Basic | Tool Awareness | Recognizing ChatGPT's limits, identifying false citations |
| Intermediate | Ethical Usage | Learning proper attribution, mastering documentation standards |
| Advanced | Integration | Using AI strategically, ensuring quality control |

A great example of this in action is Clemson University's virtual lab simulations. These immersive training sessions have led to a 39% drop in first-year plagiarism cases.

Making Peer Review More Effective

Combining these teaching methods with strong evaluation systems enhances results.

Stanford's three-stage model, which blends AI analysis with human review, has cut plagiarism by 62% and boosted writing scores by 28%.

UCLA writing courses take a different approach, using "Style Forensics" checklists to evaluate sentence structure, citation quality, and argument clarity. This has improved detection accuracy by 53%.

Meanwhile, tools like Paperpal are stepping up accountability. Paperpal integrates real-time citation checks and blockchain-based version control, and MIT trials reported a 42% increase in accountability.
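The article doesn't describe how Paperpal implements blockchain-based version control, so the following is only a generic Python sketch of the underlying idea, a hash chain. Each saved draft stores the SHA-256 hash of its own text combined with the previous draft's hash, so quietly editing an earlier draft breaks every later link. `DraftVersion`, `add_version`, and `verify` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first draft


@dataclass
class DraftVersion:
    text: str
    prev_hash: str
    hash: str


def _digest(prev_hash: str, text: str) -> str:
    # prev_hash has a fixed length (64 hex chars), so simple concatenation is unambiguous.
    return hashlib.sha256((prev_hash + text).encode("utf-8")).hexdigest()


def add_version(chain: list[DraftVersion], text: str) -> None:
    """Append a draft whose hash covers its text and the previous entry's hash."""
    prev_hash = chain[-1].hash if chain else GENESIS
    chain.append(DraftVersion(text, prev_hash, _digest(prev_hash, text)))


def verify(chain: list[DraftVersion]) -> bool:
    """Recompute every link; any edit to an earlier draft makes this return False."""
    prev_hash = GENESIS
    for version in chain:
        if version.prev_hash != prev_hash or version.hash != _digest(prev_hash, version.text):
            return False
        prev_hash = version.hash
    return True


chain: list[DraftVersion] = []
add_version(chain, "First outline of the argument.")
add_version(chain, "Revised outline with two new sources.")
print(verify(chain))  # True
chain[0].text = "Backdated replacement outline."
print(verify(chain))  # False
```

A chain stored only on the author's own machine can be recomputed from scratch by whoever controls it; tamper evidence comes from recording the hashes somewhere the author cannot rewrite, which is the role a shared ledger plays.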


AI Detection in Academic Writing

Detection tools are an important part of maintaining academic standards, working alongside preventive strategies. Current tools show an accuracy of 84-91% for GPT-3.5-generated content, but this drops to 68-74% when dealing with GPT-4 texts. This highlights the challenge of keeping up with rapidly evolving AI technology.

What AI Detection Can't Do

Despite their usefulness, AI detection tools have clear limitations:

- Length Sensitivity: Accuracy can vary significantly based on text length. For example, IEEE benchmarks show a jump from 67% accuracy for 500-word texts to 92% for texts exceeding 2,000 words.

- Language Barriers: Detection rates are 25-40% lower for non-English STEM texts compared to humanities content.

- Hybrid Content Issues: When human and AI-generated content are mixed, systems misclassify 34% of the time.
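One practical consequence of length sensitivity is that a detector score should travel with a confidence label. A minimal Python sketch, assuming the detector returns a probability between 0 and 1: the 500- and 2,000-word cut-offs mirror the IEEE figures above, while the 0.5 threshold, the label wording, and the function names are hypothetical policy choices, not any detector's API.

```python
def word_count(text: str) -> int:
    """Whitespace-delimited word count; a rough proxy for text length."""
    return len(text.split())


def confidence_band(words: int) -> str:
    """Map text length to a confidence label using the benchmark lengths quoted above."""
    if words > 2000:
        return "higher"    # benchmarks report ~92% accuracy above 2,000 words
    if words >= 500:
        return "moderate"  # 67% at 500 words; accuracy in between is not reported
    return "low"           # shorter than the shortest benchmarked length


def interpret_score(text: str, ai_probability: float, threshold: float = 0.5) -> str:
    """Combine a detector probability with a length-based confidence label.

    The threshold and the result wording are hypothetical policy choices.
    """
    words = word_count(text)
    verdict = "flag for review" if ai_probability >= threshold else "no flag"
    return f"{verdict} ({confidence_band(words)} confidence, {words} words)"


print(interpret_score("word " * 300, 0.8))  # flag for review (low confidence, 300 words)
```

The result says "flag for review" rather than "AI-generated" on purpose: a score is a prompt for a closer look, especially on short or mixed human/AI texts.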

"Detection systems are becoming an arms race - we need to focus more on helping students develop discernment rather than just catching cheaters." - Dr. Elena Martinez, Director of Academic Integrity at MIT

Using Detection Tools Effectively

Research suggests that detection tools are most effective when combined with other methods:

| Assessment Component | Impact on Academic Integrity |
|---|---|
| Detection Software | Initial screening (87-89% accuracy) |
| Writing Logs | Leads to a 41% drop in plagiarism |
| Human Review | Achieves 94% overall assessment accuracy |

Source: IEEE Transactions on Learning Technologies 2024 [10]
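The table lists three layers but not how they fit together. The Python sketch below shows one possible ordering in which detection software is only a screen and a person handles anything unresolved. The `Submission` fields, the 0.7 screening threshold, and the outcome labels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    student: str
    detector_score: float        # 0.0-1.0 score from whichever detector is in use
    has_writing_log: bool        # drafts, notes, or version history were submitted
    log_shows_progression: bool  # the log shows incremental revision over time


def triage(sub: Submission, screen_threshold: float = 0.7) -> str:
    """Route a submission through the three layers; software never decides alone."""
    # Layer 1: detection software is only an initial screen.
    if sub.detector_score < screen_threshold:
        return "no action"
    # Layer 2: a writing log that shows the work developing may explain a high score.
    if sub.has_writing_log and sub.log_shows_progression:
        return "discuss with student"
    # Layer 3: anything still unresolved goes to a human reviewer.
    return "human review"


for sub in [
    Submission("A", 0.35, True, True),
    Submission("B", 0.92, True, True),
    Submission("C", 0.92, False, False),
]:
    print(sub.student, "->", triage(sub))
# A -> no action
# B -> discuss with student
# C -> human review
```

Because detectors misclassify mixed human/AI text, a low score here means only "not escalated by this layer", not proof of authorship.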

The real value of these tools lies in using their findings as opportunities to educate students rather than solely as a basis for punishment.

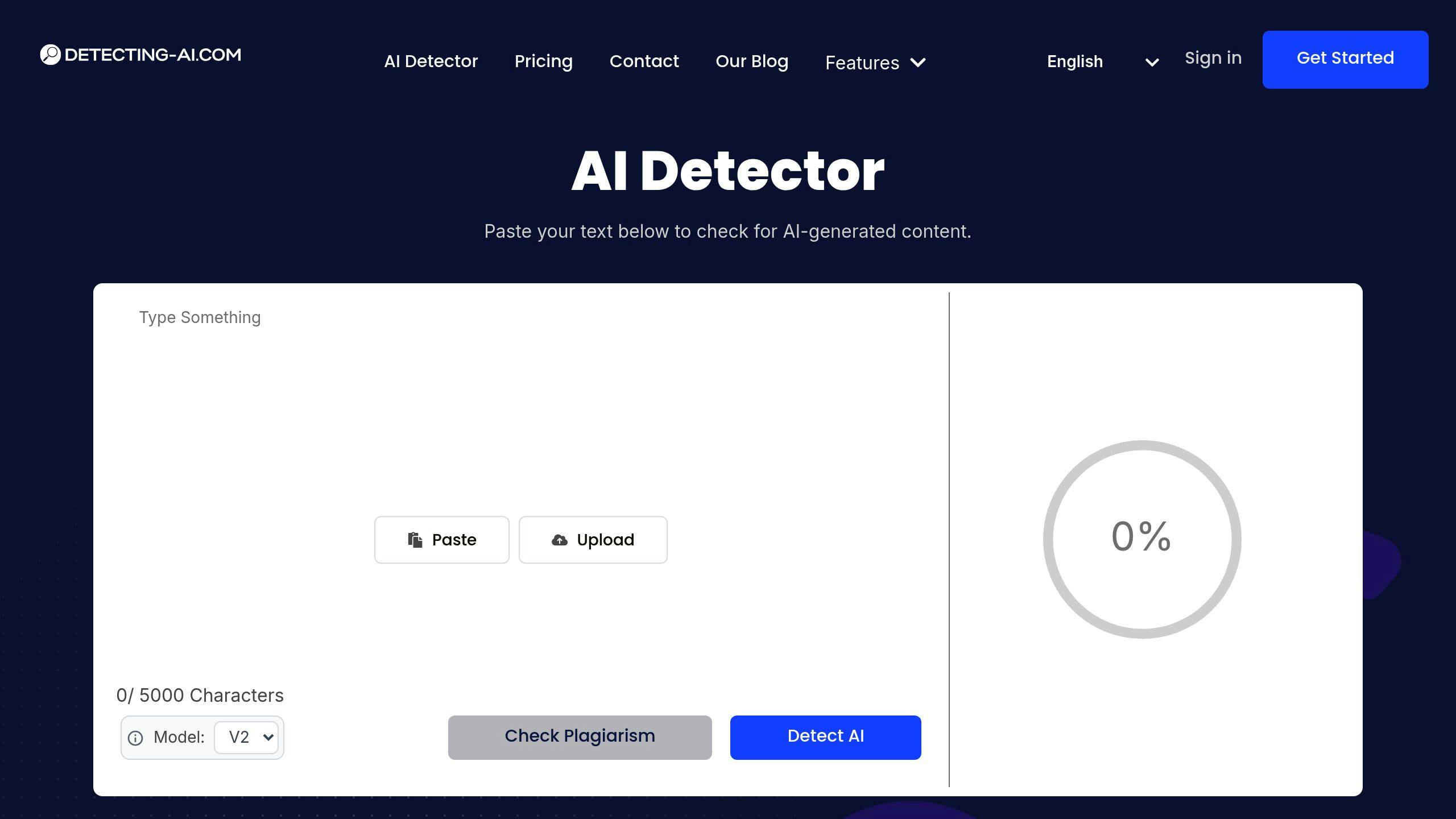

Example: Detecting-AI.com

Detecting-AI.com tackles common detection challenges by using a multi-faceted analysis approach. Weekly updates ensure a 94% accuracy rate against the latest AI models. Its passage-level analysis and support for multiple disciplines make it easier for educators to provide precise feedback.

The platform's explainability interface is particularly useful, enabling educators to give constructive, actionable feedback. This approach supports a shift from punishment to education, aligning with the broader goal of combining technology with teaching to uphold academic integrity [12].

Steps for Different Users

Implementing ethical strategies effectively requires adjustments based on the role of the individual in the academic setting.

Guidelines for Teachers

Teachers face the challenge of upholding academic integrity while navigating the rise of digital tools. For example, the University of Kansas Writing Center introduced mandatory "AI Author Statements" in 2023, which led to a 58% decrease in unflagged AI submissions within a single semester.

Here are two practical ways educators can address these challenges:

- Require tracked changes to highlight human revisions in student work.

- Include AI ethics modules as part of the course syllabus to promote awareness.
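Word's Track Changes and Google Docs' version history record revisions natively. When students submit plain-text drafts instead, a similar word-level view of what changed can be produced with Python's standard `difflib` module. This is a minimal sketch; `human_revisions` is a hypothetical helper name.

```python
import difflib


def human_revisions(earlier: str, later: str) -> list[str]:
    """List word-level deletions (-) and insertions (+) between two draft versions."""
    old_words, new_words = earlier.split(), later.split()
    matcher = difflib.SequenceMatcher(a=old_words, b=new_words)
    changes = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("delete", "replace"):
            changes.append("- " + " ".join(old_words[i1:i2]))
        if tag in ("insert", "replace"):
            changes.append("+ " + " ".join(new_words[j1:j2]))
    return changes


draft_1 = "The results show a clear trend."
draft_2 = "The results suggest a modest trend."
for change in human_revisions(draft_1, draft_2):
    print(change)
# - show
# + suggest
# - clear
# + modest
```

A diff shows what changed between two versions, not who or what made the change; it complements the other evidence of the writing process rather than replacing it.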

Tips for Research Writers

Research writers need to strike a balance between leveraging AI for efficiency and maintaining credibility. A recent study found that 68% of academic journals now mandate explicit statements detailing AI contributions.

The table below outlines how AI can be used responsibly in different stages of research:

| Research Phase | Suggested AI Usage | Documentation Needed |
|---|---|---|
| Literature Review | AI-assisted mapping | Manual verification of sources |
| Data Organization | Initial structuring | Edit history records |
| Writing Refinement | Grammar assistance only | List of specific AI tools used |
| Final Review | Plagiarism checking | AI contribution statement |
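Journals word their disclosure requirements differently, so the following is only a sketch of the bookkeeping side: keep a per-phase record as the table suggests, then assemble the tool list and contribution statement from that record instead of reconstructing it from memory. The class names and the statement template are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AIUsage:
    phase: str         # research phase, e.g. "Literature Review"
    tool: str          # tool name as it should appear in the disclosure
    purpose: str       # what the tool was used for
    verification: str  # how a human checked the output


@dataclass
class AIUsageLog:
    entries: list[AIUsage] = field(default_factory=list)

    def record(self, phase: str, tool: str, purpose: str, verification: str) -> None:
        self.entries.append(AIUsage(phase, tool, purpose, verification))

    def tools_used(self) -> list[str]:
        """Distinct tools in order of first use."""
        return list(dict.fromkeys(entry.tool for entry in self.entries))

    def contribution_statement(self) -> str:
        """Assemble a plain-language AI contribution statement from the log."""
        if not self.entries:
            return "No AI tools were used in preparing this manuscript."
        lines = [f"AI tools used: {', '.join(self.tools_used())}."]
        for e in self.entries:
            lines.append(f"{e.phase}: {e.tool} was used for {e.purpose}; {e.verification}.")
        return "\n".join(lines)


log = AIUsageLog()
log.record("Literature Review", "ChatGPT", "mapping related work",
           "every cited source was checked manually")
log.record("Writing Refinement", "Grammarly", "grammar suggestions only",
           "each suggestion was accepted or rejected by the authors")
print(log.contribution_statement())
```

Always check the target journal's own policy for the exact wording and placement it expects.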

Rules for Students

Students often misunderstand the boundaries of ethical AI usage. According to research, 61% of students wrongly believe that citing ChatGPT properly means its content isn't plagiarism.

To address this, students should follow these guidelines, which work well alongside detection tools:

- Limit AI-generated text to no more than 30% of any draft, with approval from professors.

- Use MLA-9 standards for citing ChatGPT or similar tools.

- Cross-check AI-generated outputs with original sources before submission.
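The guidelines don't specify how the 30% limit is measured. The Python sketch below assumes it is a share of words and that students mark AI-generated passages themselves as they paste them in, since detectors struggle to separate mixed human and AI text. `Segment`, `ai_share`, and `within_limit` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    text: str
    ai_generated: bool  # marked by the student when AI output is pasted into the draft


def ai_share(segments: list[Segment]) -> float:
    """Fraction of the draft's words that come from AI-marked segments."""
    total = sum(len(s.text.split()) for s in segments)
    if total == 0:
        return 0.0
    ai_words = sum(len(s.text.split()) for s in segments if s.ai_generated)
    return ai_words / total


def within_limit(segments: list[Segment], limit: float = 0.30) -> bool:
    """True if AI-marked text stays at or below the limit (30% by default)."""
    return ai_share(segments) <= limit


draft = [
    Segment("Remote learning widened gaps in access to quiet study space.", False),
    Segment("Several surveys report similar patterns.", True),
]
print(f"{ai_share(draft):.0%} AI-generated, within limit: {within_limit(draft)}")
# 33% AI-generated, within limit: False
```

Counting words rather than characters or passages is a simplification; whatever unit a course uses, stating it up front avoids disputes later.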

Quality Control Tips:

- Revise drafts manually before running them through grammar-checking tools.

- Use plagiarism detection software to ensure outputs align with original sources.

Conclusion: Balancing AI and Ethics

Maintaining academic integrity in the digital age calls for a thoughtful blend of technology and long-standing academic principles. Institutions must adjust their frameworks to integrate AI tools while staying true to their core values.

For example, Harvard's pilot program reported a 91% faculty satisfaction rate in upholding integrity standards by combining ethical guidelines with detection tools. This shows how technology can work hand-in-hand with academic rigor.

Key strategies include:

- AI literacy programs to help users understand and responsibly use these tools

- Detection frameworks to identify misuse or unethical practices

- Human oversight systems to ensure fairness and accountability

Studies show that structured, three-stage assessment models not only enhance efficiency but also support ethical practices. These approaches highlight the importance of creating preventive systems that can keep pace with evolving technologies.

The way forward? A smart mix of human judgment and AI capabilities. By doing this, institutions can improve efficiency, maintain originality, and uphold integrity in a world increasingly shaped by digital tools.